Robotics enhanced by better AI, vision systems and edge computing

Industrial robotics continues to thrive, and the cobot market alone is expected to see exponential growth throughout this year, after autonomous mobile robot sales rose by 42 per cent last year. But what robot trends can we expect to see in 2022? Here, Nigel Smith, CEO of industrial robot specialist TM Robotics, examines the latest developments in artificial intelligence (AI) and more.

One thing we can expect to see from robots in 2022 is greater flexibility. In a recent interview, Wendy Tan White, CEO of the robotics software company Intrinsic, said: “In the coming years, I’m excited to see more creativity and innovation emerge from the industrial robotics space. We’re on the cusp of an industrial robotics renaissance, driven by software-first solutions, cheaper sensing, and more abundant data.”

Manufacturers are seeking to do more with robots. Purchasers expect smaller and more flexible designs that can fit easily into existing production lines, or for their existing robots to be easily repurposed and reassigned to tasks.

There will be growing demand for robots to function outside of normal manufacturing spaces, in areas like logistics, warehouses or laboratories. Cobots, in particular, will continue to present greater possibilities for cooperation and collaboration with humans. A well-known example is Amazon’s Kiva robots, low-profile mobile drive units that carry shelving pods to workers in the warehouse and support them in their tasks.

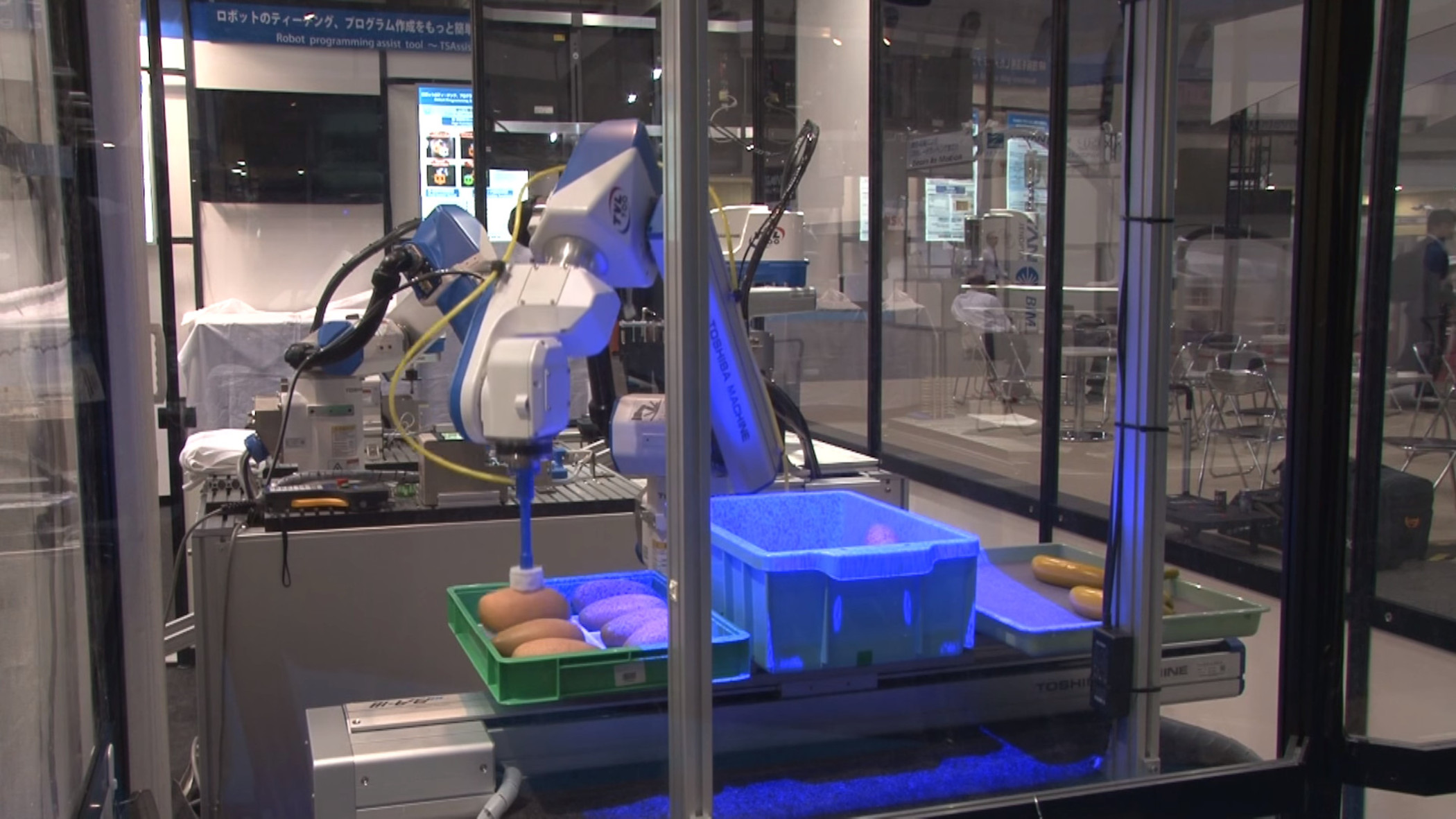

In 2022 and beyond, robots will increasingly be used to pick and move products in warehouses or around the production line. Other growth areas will include cobots that operate and tend CNC machines, and there are also growing possibilities in welding applications. But are robots properly equipped to perform these varied roles?

Vision systems

One feature that will be integral to robots performing new tasks, like picking and moving products in warehouses, will be the increased use of 2D and 3D vision systems. Whereas “blind” robots ― or those without vision systems ― can complete simple repetitive tasks, robots with machine vision can react intuitively to their surroundings.

With a 2D system, the robot is equipped with a single camera. This approach is better suited to applications where reading colours or textures is important, like barcode detection. 3D systems, on the other hand, evolved from spatial computing first developed at the Massachusetts Institute of Technology (MIT) in 2003. They rely on multiple cameras to create a 3D model of the target object, and are especially suited to any task where shape or position is important, like bin picking.
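As a rough illustration of how two cameras can recover depth, the sketch below applies the standard pinhole stereo relation, depth = focal length × baseline / disparity. It assumes two identical, parallel cameras, and the function name and numbers are made up for the example rather than taken from any particular vision system.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen by two parallel cameras (pinhole model).

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Illustrative values only: an 800 px focal length, cameras 10 cm apart,
# and a point that shifts 40 px between the left and right images.
depth = depth_from_disparity(focal_length_px=800.0, baseline_m=0.1, disparity_px=40.0)
print(f"{depth:.2f} m")
```

The closer an object is, the larger its disparity, which is why stereo systems tend to be most precise at short range, as in bin picking.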

Both 2D and 3D vision systems have a lot to offer in 2022. 3D systems, in particular, can overcome some of the errors 2D-equipped robots encounter when executing physical tasks, errors that would otherwise leave human workers to diagnose and solve the malfunction or resulting bottleneck. Going forward, robots equipped with 3D vision systems have potential for barcode reading and scanning, checking for defects in engine parts or wood quality, inspecting packaging, checking the orientation of components and more.

The right pick

Wendy Tan White mentions “cheaper sensing, and more abundant data,” and 2022 will duly see the focus of robotics shift beyond sensor device hardware towards building AI that can help optimise the use of these sensors, and ultimately improve robot performance.

A combination of AI, machine vision and machine learning is set to usher in the next phase of robotics. Expect to see more data management and augmented analytics systems, all geared towards helping manufacturers achieve higher levels of operational excellence, resilience and cost-efficiency.

This will include combinations of machine vision with learning capabilities. Take precision bin picking, for instance, one of the most sought-after tasks for robots. With previous robot systems, professional computer-aided design (CAD) programming was needed to ensure the robot could recognise shapes. While these CAD systems could identify any given item in a bin, they would run into issues if, for example, items appeared in random order during a bin picking task.

Instead, advanced vision systems use passive imaging, in which photons emitted or reflected by an object form the image. The robot can then automatically detect items, whatever their shape or order.

An example of this is Shibaura Machine’s vision system, TSVision3D, which uses two high-speed cameras to continuously capture 3D images. Using intelligent software, the system can process these images and identify the exact position of an item. Through this process, the robot can determine the most logical order and pick up items with sub-millimetre accuracy, with the same ease as a human worker.

There is much potential in combining machine vision with robot learning in 2022 and beyond. Possible applications include vision-based drones, warehouse pick-and-place applications and robotic sorting or recycling.

Trial-and-error

With TSVision3D, we are seeing robot AI evolve to the point where it can interpret images as reliably as humans. Another key feature of this evolution is machine learning that allows robots to learn from mistakes and adapt.

One example is the DACTYL robotic system created by OpenAI, an artificial intelligence research laboratory whose co-founders include Elon Musk and Sam Altman. With the DACTYL system, a virtual robotic hand learns through trial and error in simulation. What it learns is then transferred to a real-life dextrous robotic hand, so that the robot is able to grasp and manipulate objects more efficiently.

Known as reinforcement learning, and typically combined with deep learning, this process is the next step for robot AI in 2022. It’s hoped that through trial-and-error activities, as with the DACTYL system, robots will learn to perform more varied tasks in different environments.
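To make the trial-and-error idea concrete, here is a deliberately tiny sketch. It is not OpenAI's method: it is a simple epsilon-greedy learner choosing between three hypothetical grasp strategies, whose success rates are invented and hidden from the learner. The function name `learn_grasps` and all parameters are assumptions made for the example.

```python
import random


def learn_grasps(success_rates, trials=5000, epsilon=0.1, seed=0):
    """Estimate how often each grasp strategy works, purely by trying them.

    success_rates: hidden true success probability of each strategy
                   (stands in for the simulated environment)
    epsilon:       fraction of trials spent exploring a random strategy
    """
    rng = random.Random(seed)
    counts = [0] * len(success_rates)
    estimates = [0.0] * len(success_rates)

    for _ in range(trials):
        if rng.random() < epsilon:
            # Explore: try any strategy at random.
            arm = rng.randrange(len(success_rates))
        else:
            # Exploit: use the strategy that has worked best so far.
            arm = max(range(len(estimates)), key=estimates.__getitem__)

        # Simulated attempt: 1.0 for a successful grasp, 0.0 for a failure.
        reward = 1.0 if rng.random() < success_rates[arm] else 0.0

        # Update the running average success rate for that strategy.
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    return estimates


estimates = learn_grasps([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=estimates.__getitem__)
print("preferred grasp strategy:", best)
```

The learner is never told which strategy is best. It simply keeps a running success rate for each one and gradually favours whichever has failed least, which is the same basic loop of attempt, feedback and adjustment that far larger systems build on.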

Edge intelligence

Put simply, edge computing means moving data processing as close as possible to the source of the data in order to better obtain and prioritise it. Rather than “dumb” sensors, like standard microphones or cameras, this involves the use of smart sensors, such as microphones equipped with language processing capabilities, humidity and pressure sensors, or cameras outfitted with computer vision.

Edge computing can combine with the technologies mentioned above. So, a robotic arm can take a reading through a smart sensor and 3D vision system, then send it to a server with a human machine interface (HMI) where a human worker can retrieve the data. With edge systems, less data is sent to and from the cloud, which reduces network congestion and latency and allows computations to be performed more quickly. These Industry 4.0 innovations will complement the latest end-of-arm tooling hardware, like grippers for robots or clamping systems for machining centres, which is getting more precise year-on-year.
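The data-reduction side of edge computing can be sketched in a few lines. In the hypothetical example below, a device condenses its raw readings into a short summary and only that summary would travel upstream. The function name, field names, threshold and temperature values are all assumptions for illustration, not part of any specific product.

```python
from statistics import mean


def summarise_at_edge(readings, limit):
    """Reduce raw sensor readings to a small summary before sending upstream."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Only readings above the limit are forwarded individually.
        "alerts": [r for r in readings if r > limit],
    }


# Made-up temperature readings collected locally on the device.
raw = [20.1, 20.3, 20.2, 27.9, 20.0]
summary = summarise_at_edge(raw, limit=25.0)
print(summary)
```

Instead of streaming every reading to a server, the device sends one compact record plus any out-of-range values, which is where the savings in bandwidth and response time come from.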

To quote White, we can certainly expect to see “more creativity and innovation emerge from the industrial robotics space” in 2022. Improved vision systems, AI and edge systems can also combine to help ensure both manufacturers and their robots continue to thrive over the coming years.